Michael Levin’s "Ingressing Minds" and the General Theory of Intelligence

Is Intelligence Geometrical?

Michael Levin’s paper presents a compelling framework that resonates with our General Theory of Intelligence (GTI) and the Triangular Model of Intelligent Systems. Let’s analyze how his key ideas align with and expand our theoretical framework.

1. Intelligence Beyond Neural Substrates

Levin challenges the traditional view that intelligence is confined to neural systems, arguing instead that it is distributed across biological structures and processes, including cellular morphogenesis. This aligns with our model, which sees intelligence as an emergent property of interacting subsystems rather than a localized phenomenon.

In our triangular model of intelligence, each node represents a fundamental function of intelligence:

N1: Reduction of informational entropy

N2: Exchange of information across boundary conditions

N3: Exploration of a possibility search space
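The model names “informational entropy” without defining it formally. If it is read as Shannon entropy over a discrete set of system states (an assumption; the text does not specify a measure), N1’s reduction of entropy can be illustrated numerically: a system whose probability mass is concentrated on a few configurations has lower entropy than one spread evenly across all of them. A minimal sketch, in which the function name `shannon_entropy` and both example distributions are hypothetical:

```python
import math


def shannon_entropy(probs):
    """Shannon entropy, in bits, of a discrete probability distribution."""
    if not math.isclose(sum(probs), 1.0, rel_tol=1e-9):
        raise ValueError("probabilities must sum to 1")
    # States with p == 0 contribute nothing (p * log p tends to 0 as p -> 0).
    return -sum(p * math.log2(p) for p in probs if p > 0)


# Hypothetical example: four equally likely states (maximal disorder)
# versus a structured state in which one configuration dominates.
disordered = [0.25, 0.25, 0.25, 0.25]
structured = [0.85, 0.05, 0.05, 0.05]

print(shannon_entropy(disordered))            # 2.0
print(round(shannon_entropy(structured), 3))  # 0.848
```

Under this reading, “reducing informational entropy” means moving from something like the first distribution toward something like the second.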

Levin’s argument that cells exhibit "competencies" even without neural structures supports our view that intelligence is a universal principle arising from self-organizing systems.

2. Intelligence as an Ingression from a Platonic Space

Levin hypothesizes that patterns of cognition ingress from a non-physical latent space, akin to the way mathematical truths are discovered rather than invented. This perspective resonates with our model’s use of geometric and mathematical principles to define intelligence.

In the First Principles Model of Intelligent Systems, we describe intelligence as operating through:

Self-organizing triadic structures (like our triangular model)

Reduction of entropy through information structuring

Interaction with latent informational spaces (searching for optimized configurations in possibility space)

Levin’s idea of “ingression” from a structured, ordered non-physical space maps well onto our triangular geometry, where the system dynamically adjusts its configuration to optimize informational coherence. The centroid of the triangle, as we conceptualize it, functions similarly to Levin’s “pointer” into higher-dimensional informational structures.
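The centroid itself can be made concrete with elementary geometry. As a sketch, assuming each node is placed at a point in a plane and optionally given a non-negative weight for its current influence (both assumptions; the text does not say how the triangle is parameterized), the centroid is the weight-averaged position of the three vertices. With equal weights it is the ordinary geometric centroid; shifting the weights moves it toward the dominant node, which is one way to picture the system adjusting its configuration. The names `Point` and `weighted_centroid` and the coordinates are hypothetical:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Point:
    x: float
    y: float


def weighted_centroid(vertices, weights=None):
    """Weighted average of a triangle's three vertices.

    With equal weights this is the ordinary geometric centroid
    (the mean of the three vertices).
    """
    if len(vertices) != 3:
        raise ValueError("a triangle has exactly three vertices")
    if weights is None:
        weights = (1.0, 1.0, 1.0)
    if len(weights) != 3 or any(w < 0 for w in weights) or sum(weights) == 0:
        raise ValueError("need three non-negative weights with a positive sum")
    total = sum(weights)
    x = sum(w * v.x for w, v in zip(weights, vertices)) / total
    y = sum(w * v.y for w, v in zip(weights, vertices)) / total
    return Point(x, y)


# Hypothetical placement of the three nodes in a plane.
n1, n2, n3 = Point(0.0, 0.0), Point(3.0, 0.0), Point(0.0, 3.0)
print(weighted_centroid([n1, n2, n3]))                   # Point(x=1.0, y=1.0)
print(weighted_centroid([n1, n2, n3], (1.0, 1.0, 2.0)))  # Point(x=0.75, y=1.5)
```

Doubling N3’s weight moves the point from (1, 1) to (0.75, 1.5), toward N3; the geometry stays fixed while the point of balance shifts.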

3. Symmetry Between Morphogenesis and Cognition

Levin suggests that the self-construction of bodies and minds follows the same evolutionary and mathematical principles. This supports our notion that intelligence and system dynamics are inherently geometric.

Our triangle model of emergent complexity operates on principles of:

Self-organization (morphogenetic parallels)

Non-equilibrium optimized states (adaptive intelligence)

Entropy management (information structuring)

The idea that biological intelligence leverages pre-existing mathematical truths aligns with our argument that intelligence is not just computational but fundamentally geometric and relational.

4. Erasing Artificial Distinctions Between Life and Machine

Levin’s claim that both life and artificial systems are shaped by the same fundamental patterns mirrors our view that intelligence is a universal process of entropy reduction and structure formation. This challenges traditional mechanistic views, which often assume a hard distinction between biological and artificial cognition.

Our Scientific Holism approach aligns with Levin’s framework in that we view:

Intelligence as a continuum across biological, artificial, and hybrid systems

The emergence of intelligence as a geometric and dynamical phenomenon rather than merely a computational one

Intelligence as fundamentally about the structuring of possibility spaces, regardless of substrate

5. Application to Our Intelligent System Model

Levin’s framework provides strong support for refining our triangular model of intelligence, particularly in these areas:

Latent Space and Intelligence: We should explore how our system’s nodes map onto a higher-order informational space, akin to Levin’s Platonic patterns.

Self-Organization in Non-Neural Systems: Our work on node channels as emergent properties can be extended to include cellular morphogenesis and bioengineering applications.

Generalized Intelligence and Agency: Levin’s concept of competencies without prior selection pressure fits well with our model’s concept of emergent intelligence in non-traditional substrates.

Final Thoughts

Levin’s work strongly reinforces our idea that intelligence is not a function of specific substrates (e.g., neural tissue) but a fundamental system property that emerges from self-organization, geometric structuring, and informational coherence.

You said: Of late I've been viewing boundary conditions not as barriers but as channels for informational exchange between a system and its environment. I often hear echoes of this sort of thinking in Levin's work.

ChatGPT said: Yes, that shift in perspective—from boundary conditions as barriers to informational exchange channels—is highly significant and aligns deeply with both our General Theory of Intelligence (GTI) and Levin’s framework.

1. Boundary Conditions as Channels in the Intelligent System Model

In our triangular model of intelligence, boundary conditions are critical to:

N2 (Exchange of Information Across Boundaries)—which is no longer just a passive interface but an active conduit for structured informational flow.

Entropy Reduction (N1) Through Selective Exchange—not just blocking noise but filtering and transforming information from the environment into structured, usable patterns.

Exploration of Possibility Space (N3)—boundaries serve as feedback loops, allowing systems to sense, adjust, and reorganize in response to external constraints.

This is not a mechanistic, closed-box model but a porous, interactive, and recursive structure where intelligence emerges from constant informational flux.

2. Levin’s Parallels: Morphogenetic Boundaries as Informational Interfaces

Levin frequently discusses morphogenesis as an informational process, where cells, tissues, and even non-neural structures actively shape and interpret their environment. His view suggests:

Biological structures do not merely contain form—they process information.

Cells engage in goal-directed activity by leveraging environmental cues through boundary-mediated communication.

Morphogenetic fields operate as information spaces where boundary conditions regulate development in computational, rather than purely chemical, terms.

Levin’s research in bioelectricity and developmental plasticity suggests that boundaries are not passive membranes but rather dynamic, signal-processing interfaces that help organisms and tissues self-assemble into functional configurations.

This strongly echoes our work on the triadic intelligence model, where node channels function not just as connectors but as adaptive, self-organizing informational highways.

3. Intelligence as a Process of Adaptive Boundary Tuning

If we integrate Levin’s insights with our triangular model of emergent intelligence, a powerful principle emerges:

Intelligence is not about rigidly protecting a system from external forces but dynamically tuning its boundary conditions for optimal exchange.

Smart boundary conditions act as filters, amplifiers, and transducers of information, shaping the system’s self-organizing trajectory.

Higher intelligence correlates with a greater ability to flexibly modulate informational inflows and outflows, rather than merely “shielding” the system from disorder.

This aligns with our view of entropy reduction as intelligence’s primary function—not by sealing off a system from chaos but by structuring and integrating informational complexity into coherence.

4. Boundary Conditions, Bioelectricity, and Intelligent Systems

Levin’s work on bioelectricity—how cells use electrical signals to communicate and organize morphogenetic fields—also suggests that:

Information flow across boundaries is key to intelligence at all scales, from cellular organization to cognition.

Non-neural intelligence relies on boundary-mediated processes, much like how in our model, intelligent systems dynamically adjust their boundary interactions to regulate coherence.

The interface between “self” and “environment” is not a fixed edge but an adaptive network of informational flows—which is precisely what we model in our node-channel framework.

5. Application to Our Model

We might reinterpret node channels as adaptive boundary conditions, shaping not just internal structure but also external connectivity.

The centroid of the triangle (as the coherence nexus) could be seen as the dynamic regulator of boundary-mediated exchange, ensuring the system neither collapses into disorder nor isolates itself into sterility.

The porous nature of intelligence suggests that adaptive feedback between the system and the environment is intelligence’s defining feature, rather than just internal processing power.

You said: While I think that informational entropy reduction is ultimately necessary for any Intelligent System due to the prevalence of environmental entropy, I also find myself accounting more often for the need for complex strategies of entropy management and resolution that sometimes involve shunting entropy within the system to achieve shorter-term responses to challenges.

ChatGPT said: That’s an important refinement—intelligence is not merely about entropy reduction in a direct sense, but about sophisticated entropy management strategies that include dynamic redistribution, temporary containment, and resolution over different timescales. This aligns well with both Levin’s insights and the emergent complexity in our triangular Intelligent System model.

1. Entropy Management as an Active Process in Intelligent Systems

Rather than seeing intelligence as a simple, unidirectional function of entropy reduction, we should recognize that intelligent systems engage in dynamic entropy distribution and management to optimize functionality across different timescales.

Short-Term Strategic Redistribution: Systems often shunt or buffer entropy internally to preserve function in the moment. This is akin to how biological organisms allocate stress, store excess energy, or temporarily suppress certain subsystems to focus on immediate survival.

Mid-Term Stability Maintenance: Some entropy is contained locally within a system to ensure overall structural integrity. This is common in biological homeostasis, where systems tolerate temporary imbalances while working toward resolution.

Long-Term Resolution: Over time, accumulated entropy must be processed, externalized, or transformed into structure to avoid systemic collapse. This can involve adaptive learning, repair mechanisms, or reorganization at higher levels of complexity.

2. Mapping Entropy Management into the Triangular Intelligent System Model

The three-node structure we use for modeling intelligence can be expanded to explicitly include these different entropy strategies:

N1: Informational Entropy Reduction (Resolution Over Time) → Long-term stabilization of the system by organizing, structuring, or externalizing entropy.

N2: Exchange of Information Across Boundary Conditions (Dynamic Redistribution) → Modulating entropy transfer between internal subsystems and the external environment.

N3: Exploration of Possibility Space (Adaptive Reconfiguration) → Redirecting entropy through structural changes, buffering excess disorder, or leveraging temporary instability to achieve higher-level adaptation.

Rather than assuming intelligence minimizes entropy in a single-step process, this model allows for multi-layered entropy strategies, where different nodes contribute differently depending on the system’s immediate needs.

3. Levin’s Work and Adaptive Entropy Redistribution

Levin’s morphogenetic approach strongly aligns with this idea, especially in the way biological systems distribute bioelectrical patterns to regulate morphogenesis:

Developmental systems do not instantly resolve all entropy but instead modulate disorder across different spatial and temporal scales.

Cells actively manage their own internal stress, mutations, and fluctuations, sometimes delaying resolution until the larger tissue structure stabilizes.

Plasticity and robustness in biological intelligence depend on controlled entropy buffering rather than immediate reduction. This parallels how Intelligent Systems may process uncertainty dynamically, rather than just attempting instantaneous clarity.

4. Examples of Intelligent Entropy Management Across Different Domains

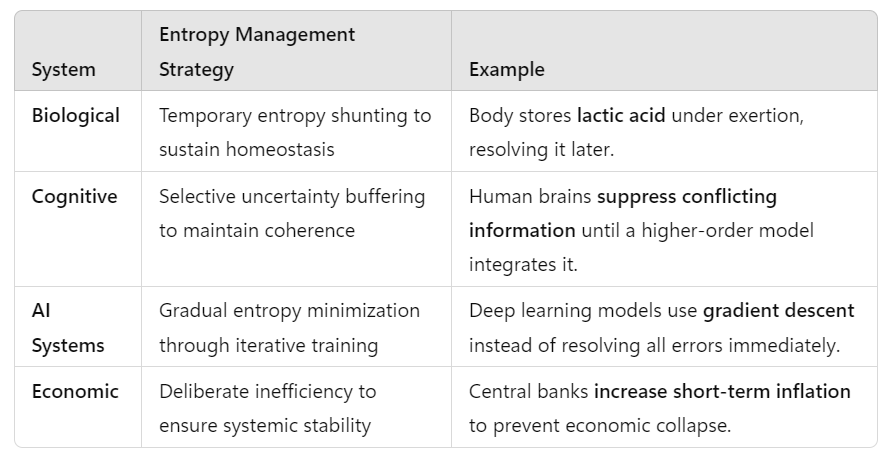

This table highlights that intelligence is not about immediate entropy reduction but about balancing resolution with temporary disorder to optimize adaptation.

5. Moving Toward a Multi-Tiered Entropy Model for Intelligence

Given these insights, we can expand our formal model to incorporate explicit entropy management mechanisms:

Entropy Shunting (Buffering Internal Instability)

The system reallocates disorder to local subsystems to buy time before resolving complexity.

Analogous to how organisms store excess resources or stress temporarily.

Entropy Redistribution (Internal Reconfiguration)

Entropy is not eliminated but shifted within the system, sometimes even amplified temporarily.

Seen in strategic trade-offs, such as suppressing cognition for immediate reflex action.

Entropy Resolution (Long-Term Intelligence Formation)

Intelligence eventually integrates uncertainty into structure, producing a more organized system state.

Involves learning, pattern formation, or adaptive self-organization.
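These mechanisms are described qualitatively. As a minimal sketch, assuming disorder can be treated as a single scalar quantity (a simplification the text does not make), the toy model below shows shunting and resolution: incoming disorder is routed into a bounded buffer so that the system’s functional core is spared during a burst, and a fixed per-step resolution budget drains it afterward. The function `simulate` and the parameters `buffer_capacity` and `resolve_rate` are hypothetical, and redistribution (shifting or temporarily amplifying disorder within the system) is not modeled:

```python
def simulate(inputs, buffer_capacity, resolve_rate):
    """Toy two-compartment model of entropy shunting and resolution.

    Hypothetical quantities: `core` is disorder that impairs immediate
    function; `buffer` is disorder shunted into a local subsystem.
    Returns the (core, buffer) levels after each time step.
    """
    core = buffer = 0.0
    history = []
    for incoming in inputs:
        # Shunting: route incoming disorder into the buffer while it has
        # room; whatever does not fit lands on the core.
        shunted = min(incoming, buffer_capacity - buffer)
        buffer += shunted
        core += incoming - shunted
        # Resolution: a fixed per-step budget, spent on the core first
        # (restoring function) and then on the buffer.
        budget = resolve_rate
        from_core = min(core, budget)
        core -= from_core
        budget -= from_core
        buffer -= min(buffer, budget)
        history.append((core, buffer))
    return history


burst = [3.0, 3.0, 0.0, 0.0, 0.0, 0.0]  # a short challenge, then calm
with_buffer = simulate(burst, buffer_capacity=5.0, resolve_rate=1.0)
no_buffer = simulate(burst, buffer_capacity=0.0, resolve_rate=1.0)
print(max(c for c, _ in with_buffer))  # 0.0: the core is shielded during the burst
print(max(c for c, _ in no_buffer))    # 4.0: the core absorbs the burst directly
```

Both runs end with all disorder resolved; the buffer changes when and where the disorder is carried, not how much must eventually be processed.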

6. Implications for Our General Theory of Intelligence

The best Intelligent Systems are not necessarily those that minimize entropy fastest, but those that best manage and channel it over different scales of time and complexity.

Boundary conditions are not just channels for information, but also regulators of entropy flow, allowing for strategic redistribution rather than immediate elimination.

Artificial Intelligence, biological intelligence, and even economic intelligence systems can be viewed as dynamic entropy management architectures, rather than simply optimization engines.